CIO Warning: Your AI Agent Strategy Will Fail Without a Knowledge Layer

The enterprise world is buzzing with the promise of agentic AI. With the AI agent market projected to surge from approximately $7.8 billion in 2025 to over $52 billion by 2030, the race to deploy autonomous AI agents capable of reasoning, planning, and acting on behalf of organizations has never been more intense.

Yet the early results are sobering. According to Gartner, over 40% of agentic AI projects will be canceled by the end of 2027 due to escalating costs, unclear business value, or inadequate risk controls. A separate MIT study paints an even bleaker picture, finding that 95% of enterprise AI projects fail to move from pilot to production. These are not marginal setbacks; they represent billions of dollars in wasted investment and, more critically, lost strategic opportunity.

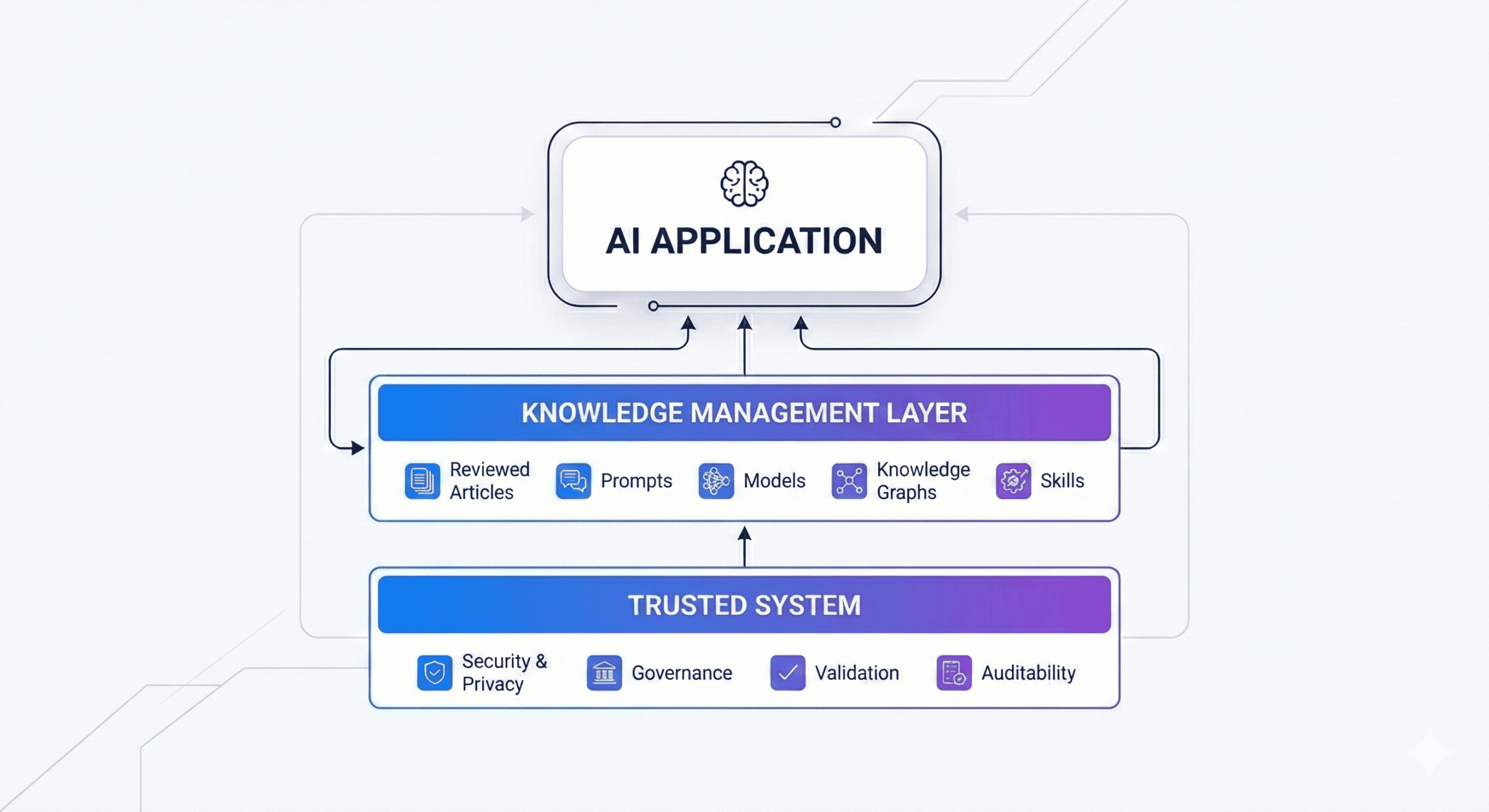

For Chief Information Officers, the question is no longer whether to pursue AI agents, but how to ensure they succeed. The answer, as this article will argue, is not about choosing a better model, a larger context window, or a more sophisticated orchestration framework. The fundamental reason these expensive, high-stakes initiatives are failing is far more foundational: the absence of a dedicated, intelligent, and governed knowledge layer between an organization’s data and its AI applications.

The Core Problem: It Is Not the Model, It Is the Knowledge

The prevailing narrative in enterprise AI focuses on model capabilities—reasoning power, token limits, and benchmark scores. But for organizations that have moved past the proof-of-concept stage, a different reality emerges. The major bottleneck is not the intelligent agent itself; it is the quality, structure, and governance of the knowledge that agent is given to work with.

A 2026 Celonis survey of over 1,600 global business leaders confirmed this directly: the top two barriers to AI adoption were a lack of internal expertise (47%) and the difficulty of getting AI to understand business context (45%). A striking 82% of decision-makers stated outright that AI will fail to deliver return on investment if it does not understand how the business actually runs.

This is not a model problem. It is a knowledge problem. And it manifests in two critical ways that CIOs must understand.

Knowledge Fragmentation

Enterprise knowledge is not a monolith. It is scattered across countless systems—CRMs, ERPs, data lakes, ticketing platforms, internal wikis, document repositories, and the uncaptured expertise residing in employees’ heads. An AI agent without a unified view of this landscape is operating with blinders on. It may retrieve a policy document from one system that contradicts the pricing rules stored in another, or it may lack the contextual understanding of a customer’s history that spans three different platforms. The result is incomplete, inconsistent, or outright incorrect actions taken with the confidence and speed of automation.

Knowledge Drift, Decay, and Hallucinations: A Governance Failure

Business knowledge is inherently dynamic—policies change, product specifications get updated, regulations evolve, and customer information shifts. This creates knowledge drift (and eventual knowledge decay): the gradual degradation of information accuracy inside automated systems. When an AI agent relies on stale or inconsistent knowledge, the impact is amplified at machine speed—causing costly errors in pricing, compliance, and customer service. This risk is often underestimated: even a small change in a source document can cascade into dramatically different agent behaviors.
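One way to make drift visible is to fingerprint each source document when an agent's knowledge is built, then compare those fingerprints against the sources as they exist today. The sketch below is illustrative only: `fingerprint`, `find_drifted`, and the document IDs are hypothetical names, and it assumes byte-level change detection is enough, which would miss cases where meaning shifts without the tracked text changing.

```python
from __future__ import annotations

import hashlib


def fingerprint(text: str) -> str:
    """Return a SHA-256 hash of the text with whitespace collapsed."""
    normalized = " ".join(text.split())  # ignore pure layout changes
    return hashlib.sha256(normalized.encode("utf-8")).hexdigest()


def find_drifted(indexed: dict[str, str], current_sources: dict[str, str]) -> list[str]:
    """List document IDs whose source no longer matches what the agent was given.

    indexed:         document ID -> fingerprint recorded at indexing time
    current_sources: document ID -> text as it exists in the source system now
    """
    drifted = []
    for doc_id, old_hash in indexed.items():
        text = current_sources.get(doc_id)
        # A missing source or a changed hash both mean the agent's copy is stale.
        if text is None or fingerprint(text) != old_hash:
            drifted.append(doc_id)
    return sorted(drifted)
```

A scheduled job could run a check like this and route any flagged documents to their owners for review before agents continue to rely on them.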

The Fragmented Landscape: Everyone Is Building a Proto-Knowledge Layer

Recognizing the centrality of knowledge, virtually every category of AI technology provider has begun building its own version of a knowledge layer. The result is a fractured ecosystem where each player solves a piece of the puzzle while inadvertently creating yet another silo. Understanding this landscape is essential for CIOs who must navigate it.

Consumer LLM Providers: Memories and Projects

The consumer-facing large language model providers (OpenAI’s ChatGPT, Anthropic’s Claude, and Manus-based applications) have begun to infer and store knowledge from user conversations. OpenAI introduced a “Memory” feature that allows ChatGPT to remember details across sessions, saving them as persistent knowledge about the user. Anthropic’s Claude offers “Projects,” which function as self-contained workspaces with their own knowledge bases and chat histories. These features represent an implicit acknowledgment that raw model intelligence is insufficient without persistent, contextual knowledge. However, this knowledge is unstructured and user-specific, and it lacks the enterprise-grade governance (access controls, audit trails, approval workflows) that organizations require.

AI Agent Orchestration Platforms: Knowledge as a Feature Tab

Horizontal orchestration platforms like LangChain and CrewAI have recognized the need for a knowledge base in AI agent workflows. CrewAI, for instance, describes its knowledge system as “a powerful system that allows AI agents to access and utilize external information sources during their tasks.” These platforms enable developers to upload documents, PDFs, and other content to ground their agents. Heavily funded vertical AI agent companies have followed the same pattern: platforms like 11x.ai and Artisan, and enterprise giants like Salesforce with its Agentforce product, all feature a “Knowledge Base” as a prominent tab or module within their applications.

The common pattern is unmistakable: every serious AI agent platform has concluded that a knowledge base is essential. But building a knowledge base as a feature within an agent platform is fundamentally different from building a knowledge management platform. As the volume of documents and knowledge artifacts scales from dozens to hundreds to thousands, these embedded knowledge bases will buckle under the weight of requirements they were never designed to handle—requirements like multi-stakeholder review workflows, granular access permissions, version management, and cross-application knowledge sharing.

Data Lake and ETL Platforms: Structured Data Without Context

Data infrastructure providers like Snowflake and Databricks excel at storing and processing structured data. However, structured data alone does not constitute knowledge. It lacks the behavioral context—the “how-to”—that an AI agent needs to act intelligently. This is precisely why protocols like the Model Context Protocol (MCP), introduced by Anthropic as an open standard for connecting AI applications to external systems, and the concept of “skills” or “agent operating procedures” have emerged. These constructs attempt to bridge the gap between raw data and actionable knowledge, but they exist as separate layers that must be managed, versioned, and governed independently.

Observability Platforms: Trapped Knowledge

Observability platforms such as LangSmith, Arize AI, and Langfuse play a critical role in the AI agent ecosystem by capturing metadata about every agent interaction: which model was used, what actions were called, what reasoning was applied, and how the agent arrived at its conclusion. This metadata is, in essence, a rich form of operational knowledge. It reveals what works, what fails, and why. Yet this knowledge typically remains trapped within the observability platform, accessible only to the engineering teams that monitor it. It is not integrated into the broader enterprise knowledge fabric where it could inform business decisions, improve agent performance across applications, or be reviewed and approved by domain experts. The observability layer generates knowledge that the enterprise should consume—but currently cannot.

Why These Players Will Struggle to Solve It Alone

The fragmented approaches described above are not merely incomplete; they face structural limitations that will prevent them from evolving into the comprehensive knowledge layer that enterprises need.

Scaling challenges. When an AI agent orchestration platform or a vertical AI SDR tool builds its own knowledge base, it is optimizing for its specific use case. But enterprise knowledge does not respect application boundaries. As the number of documents, rules, and knowledge artifacts grows into the hundreds or thousands, each with its own ingestion rules, update cadences, and access requirements, these platforms will find themselves building an entire knowledge management system from scratch—a system that is not their core competency and that diverts resources from their primary value proposition.

Knowledge is more than documents. The knowledge that AI agents need extends far beyond uploaded PDFs and help articles. It includes the prompts that define agent behavior, the tool definitions (skills) that specify how agents interact with external systems, the business rules that constrain agent actions, and the model configurations that determine which LLM is used for which task. All of these are forms of enterprise intellectual property that require tuning, human review, access management, and version control. An AI agent platform that treats knowledge as a simple document store will fail to manage this complexity.

Multi-user and multi-team complexity. In an enterprise setting, multiple teams and users interact with AI applications simultaneously. The knowledge that is relevant to a sales team’s AI agent differs from what a customer support agent needs, which in turn differs from what an internal operations agent requires. Managing these overlapping but distinct knowledge domains—including personal user context, team-level knowledge, and organization-wide policies—demands a purpose-built platform with sophisticated access controls and knowledge segmentation capabilities.

Review and approval workflows. Knowledge that has not been tested, reviewed, and approved is a liability, not an asset. When a piece of knowledge is found to be incorrect or outdated, the organization needs the ability to roll back to a previous version, trace the impact of the change, and ensure that the corrected knowledge is propagated across all dependent AI agents. These review management capabilities are table stakes for knowledge management platforms but are afterthoughts, at best, for AI agent builders.
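The four requirements above can be made concrete with a small sketch. It is a minimal illustration, not a description of any vendor's product: `KnowledgeArtifact`, `Version`, and every method name are hypothetical, and the rules it enforces (an author cannot approve their own change, rollback only to an approved version, team-based read access) are assumptions chosen to mirror the points in the text.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class Version:
    number: int
    content: str
    author: str
    approved_by: str | None = None  # stays None until a reviewer signs off


@dataclass
class KnowledgeArtifact:
    """Hypothetical governed unit of knowledge: document, prompt, skill, or rule."""

    artifact_id: str
    kind: str  # e.g. "document", "prompt", "skill", "business_rule"
    allowed_teams: set[str]
    versions: list[Version] = field(default_factory=list)
    published: int | None = None  # version number that agents currently see
    audit_log: list[str] = field(default_factory=list)

    def _get(self, number: int) -> Version:
        if not 1 <= number <= len(self.versions):
            raise LookupError(f"no version {number}")
        return self.versions[number - 1]

    def propose(self, content: str, author: str) -> Version:
        """Record a draft; agents keep seeing the currently published version."""
        version = Version(len(self.versions) + 1, content, author)
        self.versions.append(version)
        self.audit_log.append(f"{author} proposed v{version.number}")
        return version

    def approve(self, number: int, reviewer: str) -> None:
        """Publish a version once someone other than its author approves it."""
        version = self._get(number)
        if reviewer == version.author:
            raise PermissionError("authors cannot approve their own changes")
        version.approved_by = reviewer
        self.published = number
        self.audit_log.append(f"{reviewer} approved v{number}")

    def rollback(self, number: int, actor: str) -> None:
        """Re-publish an earlier version that was previously approved."""
        version = self._get(number)
        if version.approved_by is None:
            raise ValueError("can only roll back to an approved version")
        self.published = number
        self.audit_log.append(f"{actor} rolled back to v{number}")

    def read(self, team: str) -> str:
        """Return the published content if the requesting team has access."""
        if team not in self.allowed_teams:
            raise PermissionError(f"{team} may not read {self.artifact_id}")
        if self.published is None:
            raise LookupError("no approved version is published")
        return self._get(self.published).content
```

Because the same structure holds a prompt or a tool definition as easily as a policy document, every kind of knowledge an agent consumes passes through one review path and leaves one audit trail. A production system would add persistence, finer-grained permissions, and impact tracing across dependent agents, none of which this sketch attempts.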

The Next Trillion-Dollar Opportunity: Knowledge Management Reimagined

The answer to enterprise fragmentation isn’t a brand-new, unproven technology category. It’s the evolution of a discipline that has anchored enterprise operations for decades: knowledge management. The global knowledge management market is already valued at over $885B and is projected to reach $2.5T by 2030—a trajectory that underscores how central knowledge is becoming in an AI-driven enterprise.

Knowledge management platforms are uniquely positioned to become the central nervous system of the agentic enterprise because they are purpose-built to solve the exact problems that cause AI agent strategies to fail: trust, governance, verification, and operational consistency. As Eva Nahari, Chief Product Officer at Vectara, wrote for Forbes:

Rather than “How do we get better AI models?” the key question for enterprises is, “How do we build the trusted knowledge layer that lets AI act responsibly inside or on behalf of our organization?” Enterprises that invest in not just AI but in knowledge architecture and governance now could gain a compounding advantage in terms of compliance, speed, and adaptability.

This is why the knowledge management category is poised to become the critical gate between AI agent applications and the underlying systems of record. A true knowledge layer can expose verified knowledge to agents, capture metadata and insights generated during agent interactions, route updates through the right stakeholder approval workflows, and feed improvements back into the system—creating a virtuous cycle of continuous knowledge refinement.

Several platforms are evolving in this direction. Notion offers a flexible, collaborative workspace, but without rigorous internal discipline its openness can become knowledge chaos. Guru delivers strong, role-based knowledge distribution, yet can become difficult to manage at enterprise scale without tight governance. Atlassian Confluence is a powerful wiki—especially for development teams—but often becomes another silo and typically lacks the real-time, guided delivery needed for customer-facing AI agents. More mature, enterprise-focused platforms—such as eGain’s AI Knowledge Hub—emphasize KCS v6 verification and a hybrid approach that orchestrates AI with human expertise, reflecting the governance-first operating model required in this new era.

Regardless of platform choice, the conclusion is the same: a dedicated knowledge management layer is no longer optional—it is a prerequisite for making agentic AI reliable in production.

To fulfill this role, knowledge management must expand well beyond traditional help articles, product documentation, and internal wikis. The modern knowledge layer for the agentic enterprise must support a broader set of capabilities—especially skill articles, prompt management, the ability to consume and operationalize AI agent metadata, and multi-layered knowledge graphs that connect content, context, policy, and execution.

The CIO’s Mandate: Build the Knowledge Layer First

The allure of deploying autonomous AI agents is powerful, and the competitive pressure is real—89% of leaders view AI as their single biggest opportunity to compete in the market. But the path to success is paved with discipline, not just technology. The evidence from Gartner, MIT, McKinsey, Celonis, and Deloitte is unambiguous: without a robust, centralized, and intelligently governed knowledge layer, any AI agent strategy is destined to become another failed pilot.

CIOs must resist the temptation to pursue fragmented, application-specific knowledge solutions. The mandate is to step back and invest in the foundational knowledge infrastructure that will enable not just one AI agent, but the entire portfolio of current and future AI initiatives to succeed. This means:

Audit the current knowledge landscape. Map where enterprise knowledge currently resides, identify the silos, and assess the governance gaps. Understand that knowledge is not just documents—it includes prompts, skills, tool definitions, and the operational metadata generated by existing AI systems.

Champion knowledge management as a strategic platform. Elevate knowledge management from a departmental tool to an enterprise-wide strategic platform. Position it as the critical middleware layer between your systems of record and your AI applications.

Demand interoperability. Insist that AI agent platforms, observability tools, and data infrastructure providers integrate with your knowledge management layer, rather than building proprietary knowledge silos.

Invest in governance from day one. Implement version control, access management, review workflows, and audit trails for all forms of knowledge that feed AI agents. The cost of governing knowledge proactively is a fraction of the cost of debugging hallucinations and compliance failures reactively.

The future of the enterprise will not be defined by how many AI agents it deploys or how sophisticated its models are. It will be defined by how intelligently and responsibly those agents can act on trusted, governed, and continuously evolving knowledge. The knowledge layer is not an optional enhancement to your AI agent strategy. It is the strategy.
